Understanding the Impact of AI on Computer Hardware

Artificial intelligence (AI) is transforming not only software but also the hardware it runs on. As AI models become larger and more data-intensive, they demand more parallel computation, memory bandwidth, and energy efficiency than traditional general-purpose architectures were designed to provide. Meeting that demand is driving innovation across the electronics industry, from specialized processors and faster memory and storage to new interconnects and advanced manufacturing processes. Understanding this interplay between AI software and hardware is crucial for grasping where computing technology is headed.

How AI is Reshaping Processors and Digital Circuits

At the heart of AI’s impact on hardware lies the evolution of processors and digital circuits. Traditional Central Processing Units (CPUs) are general-purpose and excel at sequential, branch-heavy tasks, but they struggle with the massive parallel computations that AI workloads such as neural network training require. Those workloads are dominated by matrix multiplications, which break down into very large numbers of independent multiply-accumulate operations that can run simultaneously. This has driven the rise of Graphics Processing Units (GPUs): originally designed for rendering graphics, they contain thousands of simple cores suited to exactly this kind of parallel arithmetic and have become indispensable for AI. Beyond GPUs, specialized AI accelerators are gaining prominence. Neural Processing Units (NPUs) are typically optimized for energy-efficient inference, Application-Specific Integrated Circuits (ASICs) offer maximum efficiency for specific AI algorithms, and Field-Programmable Gate Arrays (FPGAs) balance flexibility and performance by allowing custom circuits to be reconfigured as AI models evolve. This drive toward specialized silicon reflects a core tenet of modern AI engineering: match each computation to the hardware that runs it most efficiently.
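To make the parallelism argument concrete, here is a minimal sketch in Python of a single fully connected (dense) neural-network layer, assuming plain lists rather than any framework. Every output neuron is an independent dot product, so the rows can be evaluated in any order or all at once. The helper names (`dot`, `dense_sequential`, `dense_parallel`) and the layer values are hypothetical, and the thread pool only demonstrates independence; it does not model real accelerator speedups.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, x):
    # One output neuron: a chain of multiply-accumulate operations.
    return sum(w * xi for w, xi in zip(row, x))


def dense_sequential(weights, bias, x):
    # CPU-style evaluation: output neurons are computed one after another.
    return [dot(row, x) + b for row, b in zip(weights, bias)]


def dense_parallel(weights, bias, x, workers=4):
    # Each output depends only on its own weight row and the shared input,
    # so all rows can be dispatched at once. A GPU maps this onto thousands
    # of hardware lanes; Python threads here only illustrate the independence
    # and give no real speedup for pure-Python arithmetic.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        sums = list(pool.map(lambda row: dot(row, x), weights))
    return [s + b for s, b in zip(sums, bias)]


# Hypothetical 2-neuron layer with 3 inputs.
W = [[1.0, 2.0, 3.0],
     [0.5, -1.0, 0.0]]
b = [0.1, 0.2]
x = [1.0, 1.0, 2.0]

print(dense_sequential(W, b, x))
print(dense_parallel(W, b, x))
```

Both functions return the same values; they differ only in scheduling, and that freedom to schedule the work in parallel is the property GPUs and other accelerators exploit.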

The Evolution of Storage and Memory for AI Systems

AI’s voracious appetite for data has significant implications for storage and memory. Training large AI models can involve terabytes or even petabytes of data, demanding not only vast storage capacity but also exceptionally high data transfer rates. High-Bandwidth Memory (HBM), which stacks DRAM dies vertically and places them beside the processor in the same package, has become common in high-end AI accelerators because it offers far greater bandwidth than traditional DDR memory. Non-Volatile Memory Express (NVMe) Solid State Drives (SSDs) are replacing older SATA-based storage because their higher throughput and lower latency keep processors supplied with data. Persistent memory, which offers near-DRAM speed while retaining data without power, has also been explored as a way to bridge the performance gap between memory and storage and give AI systems faster access to large datasets. These advancements are critical for managing the sheer volume and velocity of data at the center of AI operations.
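The gap between memory and storage tiers is easiest to see with back-of-the-envelope arithmetic: the minimum time to move data is its size divided by the sustained bandwidth. The sketch below assumes rounded, illustrative bandwidth figures (labeled as assumptions in the code), not measured or vendor-published numbers, and it ignores latency, contention, and protocol overhead, so real transfers take longer. The function name `transfer_seconds` is hypothetical.

```python
def transfer_seconds(num_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Lower bound on the time to move num_bytes at a sustained bandwidth."""
    if bandwidth_bytes_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return num_bytes / bandwidth_bytes_per_s


GB = 10**9
TB = 10**12

# Illustrative, rounded figures only (assumptions, not vendor specifications).
scenarios = {
    "read 80 GB of weights, DDR-class memory (~100 GB/s)": (80 * GB, 100 * GB),
    "read 80 GB of weights, HBM-class memory (~2,000 GB/s)": (80 * GB, 2000 * GB),
    "stream a 1 TB dataset, SATA SSD (~0.5 GB/s)": (1 * TB, 0.5 * GB),
    "stream a 1 TB dataset, NVMe SSD (~5 GB/s)": (1 * TB, 5 * GB),
}

for label, (size, bandwidth) in scenarios.items():
    print(f"{label}: {transfer_seconds(size, bandwidth):,.2f} s")
```

Under these assumed figures, a twentyfold bandwidth difference turns a sub-second pass over model weights into a few hundredths of a second, and a dataset pass measured in tens of minutes into a few minutes; an accelerator that finishes its arithmetic faster than data arrives simply waits.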

Enhancing Connectivity and Displays for AI-Driven Devices

Connectivity is also being transformed to support AI workloads. High-speed interconnects such as PCIe Gen5, and the newer Gen6 specification that doubles per-lane bandwidth again, are essential for rapid data exchange between processors, memory, and storage within a single system. In multi-accelerator setups, proprietary links such as NVIDIA’s NVLink provide low-latency, high-bandwidth communication directly between GPUs, which is crucial for distributed AI training, where accelerators must repeatedly exchange intermediate results such as gradients. Open standards such as Compute Express Link (CXL), built on the PCIe physical layer, add cache-coherent connections between CPUs, accelerators, and pooled memory. In terms of displays and user interfaces, AI is enabling more immersive and interactive experiences. High-resolution displays help users inspect complex AI outputs, while AI-driven augmented reality (AR) and virtual reality (VR) devices need specialized hardware to process sensor data and render detailed digital environments in real time. Bringing AI into everyday edge devices also depends on robust, low-power connectivity.
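The gradient exchange that GPU interconnects carry during distributed training is commonly implemented as an all-reduce: every worker contributes its gradients and every worker receives the combined result. The sketch below simulates one well-known algorithm, ring all-reduce (one of the algorithms implemented in NVIDIA’s NCCL library), in plain Python. It is an illustrative assumption, not how any particular system or library is implemented, and real collectives run these steps concurrently over physical links. The function name and gradient values are hypothetical. The sketch also counts the data each worker sends, which comes to about 2(n − 1)/n times the gradient size. That amount stays nearly constant as workers are added, so per-link bandwidth largely determines how long each exchange takes.

```python
def ring_allreduce_mean(grads):
    """Average equal-length gradient vectors across n simulated workers.

    Returns (averaged buffers, number of elements sent by each worker).
    """
    n = len(grads)
    length = len(grads[0])
    # Split the vector into n contiguous chunks; chunk c covers bounds[c].
    bounds = [(c * length // n, (c + 1) * length // n) for c in range(n)]
    buf = [list(g) for g in grads]
    sent = [0] * n

    def ring_step(chunk_for, combine):
        # Every worker w sends one chunk to its right-hand neighbour (w + 1) % n.
        # Payloads are copied first so all sends happen "simultaneously".
        msgs = []
        for w in range(n):
            lo, hi = bounds[chunk_for(w)]
            msgs.append(((w + 1) % n, lo, buf[w][lo:hi]))
            sent[w] += hi - lo
        for dst, lo, payload in msgs:
            combine(buf[dst], lo, payload)

    def add_into(target, lo, payload):
        for k, v in enumerate(payload):
            target[lo + k] += v

    def copy_into(target, lo, payload):
        target[lo:lo + len(payload)] = payload

    # Phase 1, reduce-scatter: after n - 1 steps, worker w holds the full sum
    # of chunk (w + 1) % n.
    for step in range(n - 1):
        ring_step(lambda w: (w - step) % n, add_into)
    # Phase 2, all-gather: circulate the completed chunks so every worker
    # ends up with every summed chunk.
    for step in range(n - 1):
        ring_step(lambda w: (w + 1 - step) % n, copy_into)

    # Data-parallel training typically averages rather than sums gradients.
    return [[v / n for v in b] for b in buf], sent


# Hypothetical gradients from 3 workers, 6 parameters each.
grads = [[1, 2, 3, 4, 5, 6],
         [7, 8, 9, 10, 11, 12],
         [13, 14, 15, 16, 17, 18]]
averaged, sent = ring_allreduce_mean(grads)
print(averaged[0])
print(sent)
```

Each worker ends up with the same averaged vector, and each sends 2(n − 1)/n × 6 = 8 elements here rather than all 6 elements to each of its 2 peers, which is why ring-style collectives scale well on fast point-to-point links.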

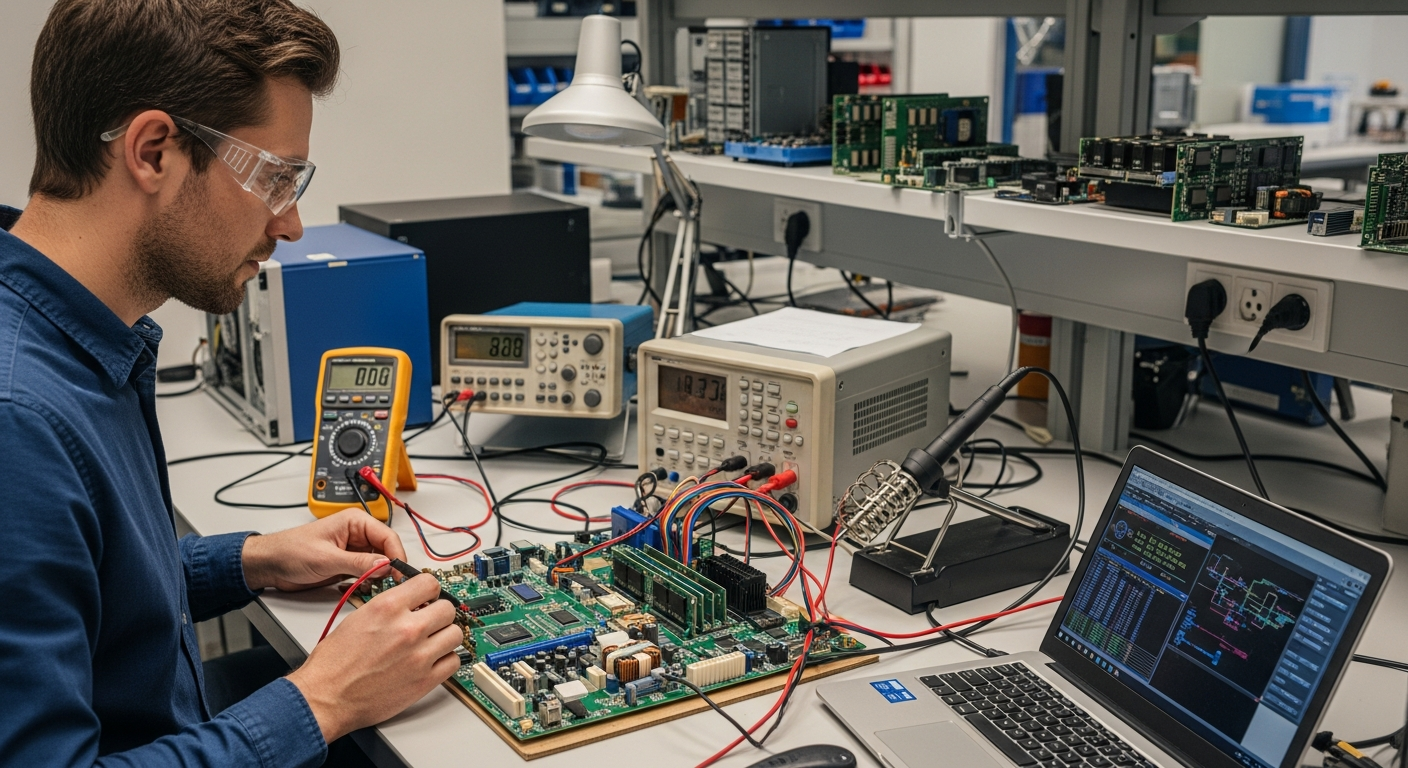

The Role of Specialized Hardware in AI Engineering

AI engineering increasingly relies on a symbiotic relationship between software frameworks and the underlying hardware. The effectiveness of AI models often hinges on how efficiently they use hardware acceleration. Frameworks such as TensorFlow and PyTorch are continuously optimized to dispatch their operations to vendor-tuned kernel libraries, such as NVIDIA’s cuDNN on GPUs, and to compile models for specialized ASICs such as Google’s TPUs through the XLA compiler. This close integration lets developers maximize performance and shorten training times without writing hardware-specific code. Deploying AI in robotics and other embedded systems, meanwhile, requires hardware that can run inference with low power consumption and minimal latency, often in constrained environments. This need drives innovation in compact, energy-efficient processors and integrated systems-on-a-chip (SoCs) designed specifically for real-world AI applications.
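Running inference at low power often means doing the arithmetic in reduced precision. The sketch below assumes one simple scheme, symmetric per-tensor 8-bit integer quantization, which the text above does not specify; production toolchains such as TensorFlow Lite or PyTorch’s quantization tools use more elaborate variants. The function names and numeric values are hypothetical and chosen only for illustration.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: floats -> (int8 codes, scale)."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Map each value to the nearest integer step, clamped to [-127, 127].
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def int8_dot(a_codes, a_scale, b_codes, b_scale):
    # Integer multiply-accumulate (the kind of operation low-power NPUs
    # accelerate), followed by one floating-point rescale of the sum.
    acc = sum(x * y for x, y in zip(a_codes, b_codes))
    return acc * a_scale * b_scale


# Hypothetical weights and activations for one neuron.
weights = [0.5, -1.27, 0.02, 0.9]
inputs = [1.0, 0.3, -2.0, 0.5]

w_codes, w_scale = quantize_int8(weights)
x_codes, x_scale = quantize_int8(inputs)

exact = sum(w * x for w, x in zip(weights, inputs))
approx = int8_dot(w_codes, w_scale, x_codes, x_scale)
print(f"float result: {exact:.4f}")
print(f"int8 result:  {approx:.4f}")
```

The two results differ only slightly, while the inner loop multiplies small integers instead of floating-point numbers, which typically costs less silicon area and energy per operation. That trade-off is one reason edge accelerators commonly support low-precision integer arithmetic.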

Future Trends in AI Hardware Innovation

The future of AI hardware may bring even more radical innovations. Quantum computing, still in its early stages, could eventually solve certain problems relevant to AI that are intractable for classical computers. Neuromorphic computing, inspired by the structure and function of the human brain, aims to build processors that learn and process information with far greater energy efficiency. Optical computing, which uses light instead of electrons to carry out computation, could offer significant advantages in speed and energy use. Alongside these emerging technologies, ongoing efforts focus on improving the energy efficiency of existing hardware, exploring new materials, and developing more sustainable manufacturing practices. These innovations are crucial for sustaining AI’s rapid growth while limiting its environmental impact, so that computing can become both more powerful and more responsible.

| Hardware Type | Common Providers | Key Features | Estimated Cost Range |
|---|---|---|---|
| GPU | NVIDIA, AMD | Parallel processing, high memory bandwidth | Moderate to High |
| NPU | Intel, Qualcomm | Optimized for AI inference, energy-efficient | Low to Moderate |
| ASIC | Google (TPU), Cerebras | Custom-designed for specific AI tasks, high performance | High |
| FPGA | AMD (Xilinx), Altera | Reconfigurable logic, flexible for evolving algorithms | Moderate to High |

Prices, rates, or cost estimates mentioned in this article are based on the latest available information but may change over time. Independent research is advised before making financial decisions.

AI continues to have a profound, transformative impact on computer hardware. From specialized processor designs to advances in memory, storage, and connectivity, much of the computing stack is being redesigned to meet the demands of intelligent systems. This pursuit of greater computational power and efficiency not only enables more sophisticated AI applications but also drives broader innovation across the technology sector, shaping the capabilities of future digital systems and devices worldwide.